Wei Wei

A CS MSc Student in utouch, ilab, University of Calgary.

A radical designer.

An enthusiastic programmer.

A mediocre gamer.

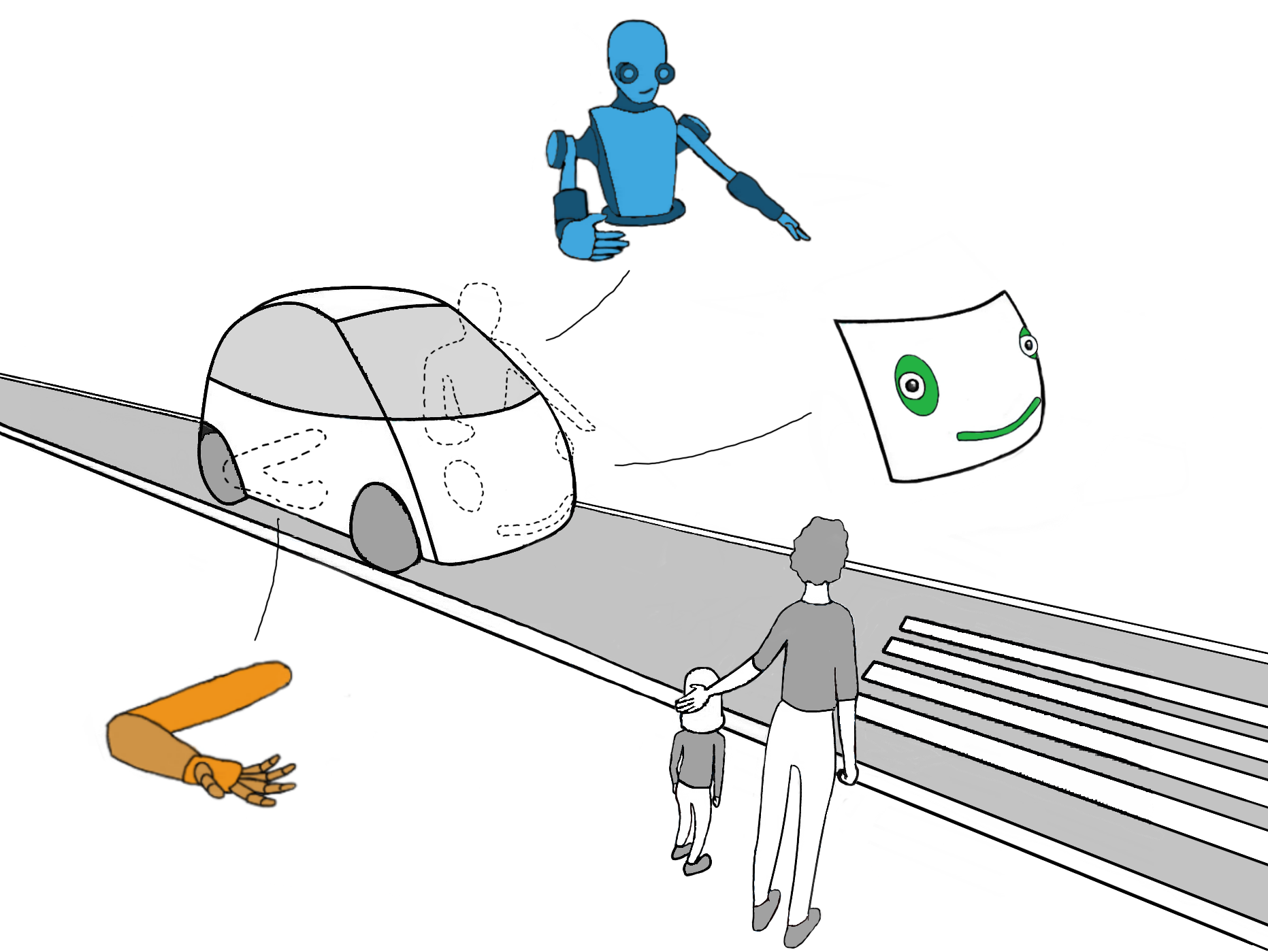

Anthropomorphizing AVs: Leveraging Human Cues for Designing AV-Pedestrian Interactions

Expressions, gestures, and body language are fundamental to human communication. Our work explores their potential benefits and limitations within Autonomous Vehicle (AV)-pedestrian interactions. We designed three anthropomorphic interfaces for AVs: facial expressions, hand gestures, and humanoid torsos. With these designs, we contribute a preliminary design critique to understand the strengths and weaknesses of specific anthropomorphic interfaces, a realization of these AV interfaces in an immersive VR testbed which allows pedestrians to make physical crossing decisions, and an evaluation of our testbed through a user study. Our findings suggest that AV interfaces with a higher degree of human likeness receive more favourable responses from pedestrians. Additionally, interfaces which incorporate intuitive, dynamic, and unambiguous features outperform others. Our results also highlight that some anthropomorphic AV interfaces may be efficient while not necessarily guaranteeing a high level of pedestrian comfort.

Under review

Touch and Beyond: Comparing Physical and Virtual Reality Visualizations

We compare physical and virtual reality (VR) versions of simple data visualizations. We also explore how the addition of virtual annotation and filtering tools affects how viewers solve basic data analysis tasks. We report on two studies, inspired by previous examinations of data physicalizations. The first study examined differences in how viewers interact with physical hand-scale, virtual hand-scale, and virtual table-scale visualizations, and the impact that the different forms had on viewers' problem-solving behavior. The second study examined how interactive annotation and filtering tools might support new modes of use that transcend the limitations of physical representations. Our results highlight challenges associated with virtual reality representations and hint at the potential of interactive annotation and filtering tools in VR visualizations.

Danyluk, Kurtis, Teoman Tomo Ulusoy, Wei Wei, and Wesley Willett. "Touch and Beyond: Comparing Physical and Virtual Reality Visualizations." IEEE Transactions on Visualization and Computer Graphics (2020).

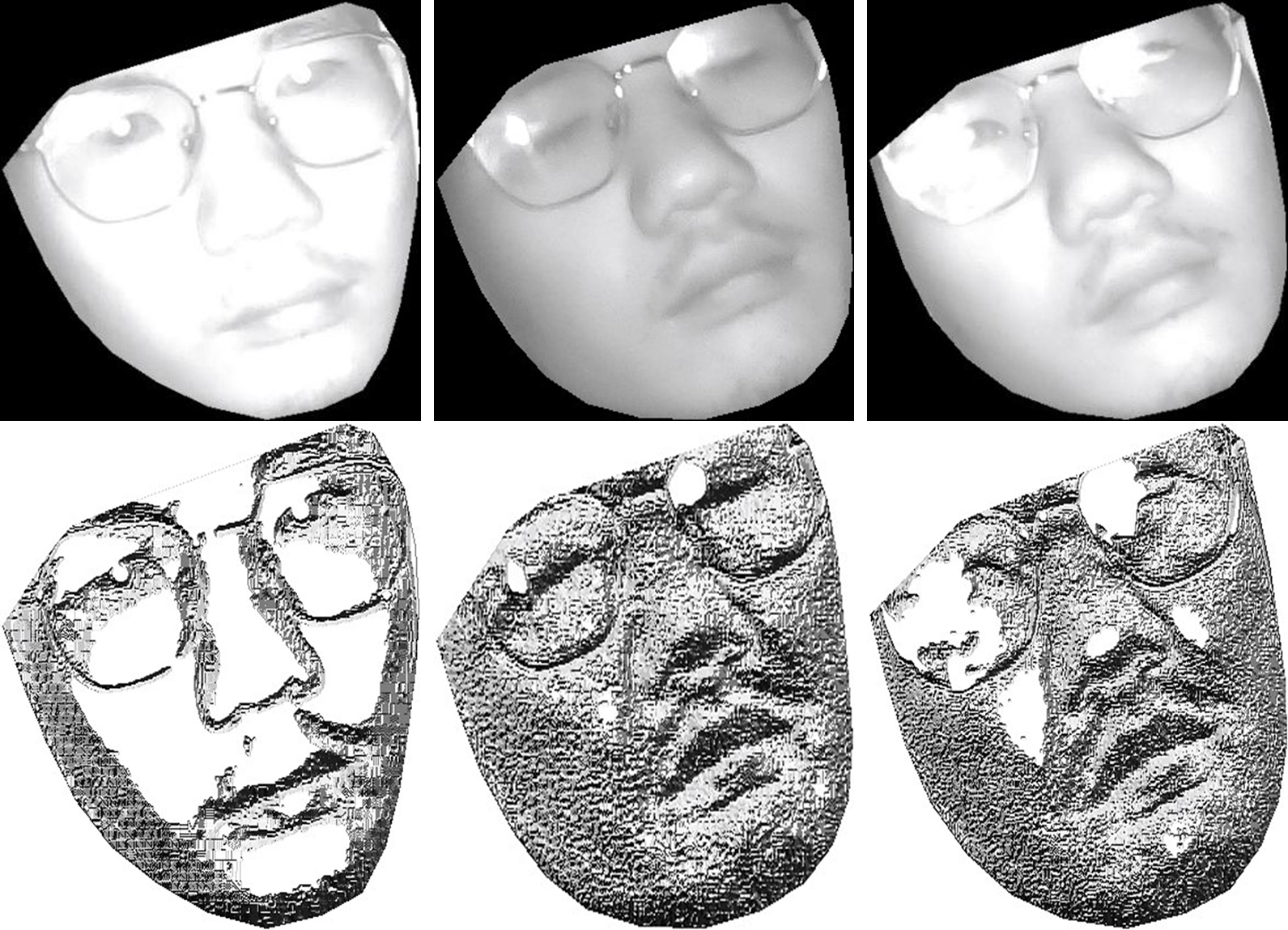

Living Body Detection Technology Based on Infrared Camera

Taking advantage of infrared cameras, this work explores how a support vector machine (SVM) and a convolutional neural network (CNN) can resist paper photo attacks in living body detection scenarios. The work focuses on using infrared images to suppress the influence of ambient light and to reflect the contours and skin texture of the face more clearly. For the SVM, the work builds on the characteristics of infrared images: it uses local binary patterns (LBP) and the gray-level co-occurrence matrix (GLCM) to extract texture features from face images, then uses the SVM to perform binary classification on those features. For the CNN, the work uses current deep learning frameworks (Keras and TensorFlow) to train and test models for living body detection.

Undergraduate Thesis
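
To give a rough idea of the texture features mentioned above, here is a minimal sketch in plain Python. It is not the code from the thesis: the function names are hypothetical, the image is a plain list of lists of integer gray levels, and the choices of an 8-neighbour local binary pattern, a horizontal co-occurrence offset, and contrast as the only co-occurrence statistic are assumptions made for illustration. The resulting feature vector is the kind of input a binary SVM classifier (for example, scikit-learn's `SVC`) could be trained on.

```python
from collections import Counter


def lbp_codes(image):
    """8-neighbour local binary pattern code for each interior pixel."""
    # Neighbour offsets, clockwise starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright as the center.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            codes.append(code)
    return codes


def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes."""
    codes = lbp_codes(image)
    counts = Counter(codes)
    return [counts.get(value, 0) / len(codes) for value in range(256)]


def glcm_horizontal(image, levels):
    """Normalized co-occurrence matrix for horizontally adjacent pixel pairs."""
    matrix = [[0.0] * levels for _ in range(levels)]
    pairs = 0
    for row in image:
        for left, right in zip(row, row[1:]):
            matrix[left][right] += 1
            pairs += 1
    return [[count / pairs for count in line] for line in matrix]


def glcm_contrast(glcm):
    """Contrast statistic: sum of p(i, j) * (i - j)^2 over the matrix."""
    return sum(p * (i - j) ** 2
               for i, line in enumerate(glcm)
               for j, p in enumerate(line))


def texture_features(image, levels):
    """Concatenate the LBP histogram with the GLCM contrast (assumed feature set)."""
    return lbp_histogram(image) + [glcm_contrast(glcm_horizontal(image, levels))]


# Tiny made-up 3x3 image with gray levels 0..3, only to exercise the functions.
sample = [[0, 1, 2],
          [1, 2, 3],
          [2, 3, 0]]
features = texture_features(sample, levels=4)
```

In practice each face image would yield one such vector, and the vectors from real faces and paper photos would form the two classes for the SVM.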


Address: MS 680, ilab, University of Calgary, 2500 University Dr NW Calgary, Alberta T2N 1N4, Canada

Email: weiwei2@ucalgary.ca